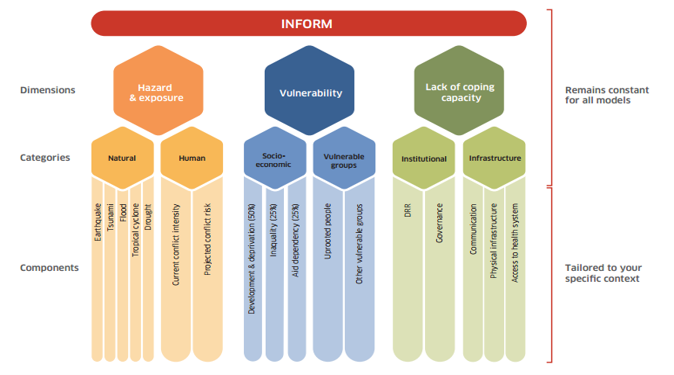

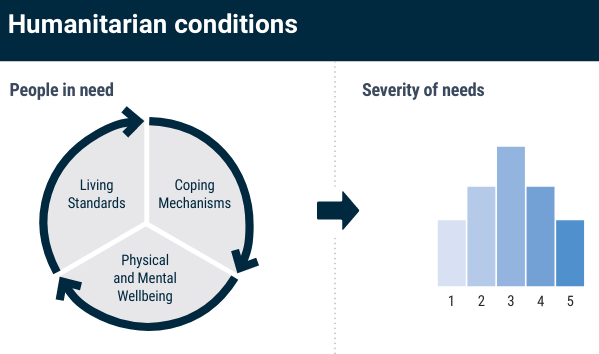

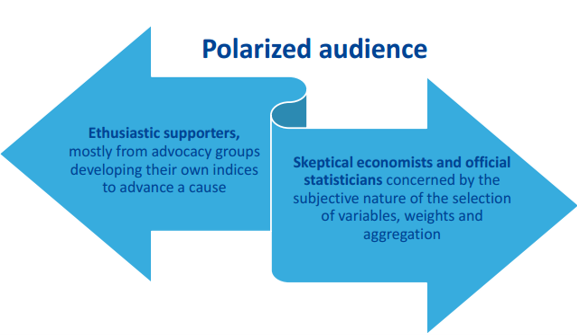

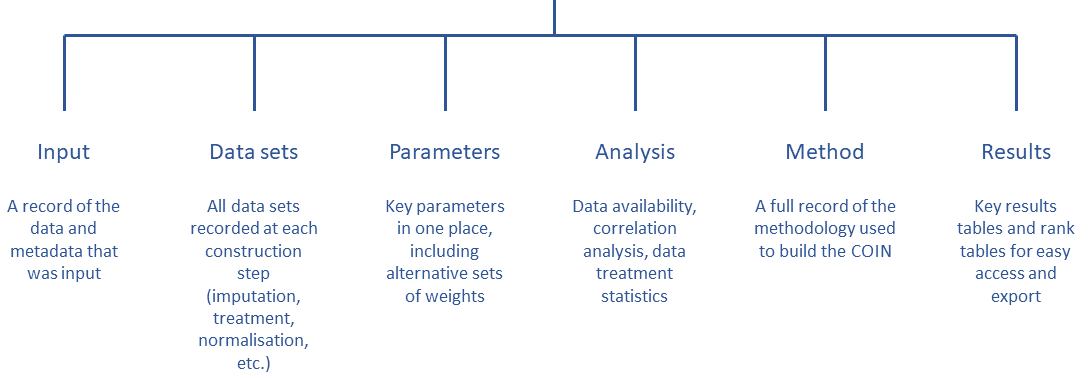

class: center, middle, inverse, title-slide # Building Humanitarian Severity Index with R, COINr & Compind ## Ensure Statistical Rigor & Robustness ### ### Last update: 2022-01-19 --- layout: true <div class="my-footer"><span></span></div> --- # Humanitarian Severity Index .pull-left[ > "_To support the prioritization of needs, Humanitarian Country Teams have the option to use a standardized tool based on a severity ranking approach. The tool provides a method and structure to prioritize needs by categorising and weighing indicators along geographical areas, sectors, inter-sectoral aspects and demographics._" [Humanitarian Needs Comparison Tool](https://www.humanitarianresponse.info/sites/www.humanitarianresponse.info/files/documents/files/Comparison Tool Guidance 2015 FINAL-EN.pdf#page=12) Crisis severity is indeed a complex, multi-factorial construct -> The challenge is to summarize and __condense__ the information of a plurality of underlying indicators into a single measure, in a way that __accurately__ reflects the underlying concept ] .pull-right[ Each index acts as a metric to quantify and to compare the situation of different admin units. Example from JRC Global [INFORM]((http://www.inform-index.org), an index designed to assess humanitarian risks for 191 countries at the national level.  ] ??? https://drmkc.jrc.ec.europa.eu/inform-index/Portals/0/InfoRM/Severity/INFORM%20Severity%20Index%20User%20Guide%20v01%20October%202020.pdf --- class: inverse, left, middle # Aid organisations and donors commit to... See Grand Bargain Commitment #5: [Improve joint and impartial needs assessments](https://interagencystandingcommittee.org/improve-joint-and-impartial-needs-assessments) > '_Dedicate resources and involve independent specialists within the clusters to strengthen [..] analysis in a __fully transparent__, collaborative process, which includes a brief summary of the methodological and analytical limitations of the assessment._" ??? Notes - --- ## Joint Interagency Analysis Framework (JIAF) .pull-left[ Implementing Grand Bargain Commitment, the [JIAF](https://www.jiaf.info) defines: * a list of [curated indicators](https://eur02.safelinks.protection.outlook.com/?url=https%3A%2F%2Fdocs.google.com%2Fspreadsheets%2Fd%2F1M8Nn8cI0o-l2hbgQ9zXl5Dib8nmh3r-bQSbWtLwhXV8%2Fedit%23gid%3D0&data=04%7C01%7Clegoupil%40unhcr.org%7Cfcee88419ef346edab1908d9cf80b85f%7Ce5c37981666441348a0c6543d2af80be%7C0%7C0%7C637768975435830277%7CUnknown%7CTWFpbGZsb3d8eyJWIjoiMC4wLjAwMDAiLCJQIjoiV2luMzIiLCJBTiI6Ik1haWwiLCJXVCI6Mn0%3D%7C3000&sdata=3fRLybk9VHL9kEw7Zvgoi%2Fr2pTrwSK7Ib9%2BOuMf%2FdjQ%3D&reserved=0) * guidance for the [formula](https://www.jiaf.info/wp-content/uploads/2021/07/JIAF-1.1.pdf#page=40) * a [theoretical framework](https://www.jiaf.info/wp-content/uploads/2021/05/Technical-briefing-note-v3-May-2021-1.pdf) to define the main components of humanitarian severity 1. Living Standards 2. Coping Mechanism 3. Physical & Mental Wellbeing ] .pull-right[  ] ??? Notes - --- ## The risk with not statistically-sound index > "_And so we end up relying on a lot of expert judgment, which in effect means that we’re faking it--we have a process and looks like it’s very rigorous, and in the end is just being done by people’s...assumptions._" .pull-left[ Conceptual and statistical flaws in the calculation of Humanitarian Severity can greatly limit its usefulness * Are indicators __substituable__? i.e. shall we allow one indicator to compensate another one? * Are all indicators "really useful" to the final measurement, i.e. correctly __depicting__ the severity? * Does the way the indicators are 'assembled" together correctly reflect their true respective __importance__? * Can the methodology be fully __positively audited__? ] .pull-right[  ] ??? Composite indicators are developed to address the challenge that comes with the aggregation of heterogeneous information. They are developed to convey consistent policy messages Because of their very nature, composite indicators cannot be validated versus a ground truth and are always a compromise and as a result tends to create polarized audiences. Some cons: Composite indicators may send misleading, non-robust policy messages if they are poorly constructed or misinterpreted. Sensitivity analysis can be used to test composite indicators for robustness. The simple “big picture” results which composite indicators show may invite politicians to draw simplistic policy conclusions. Composite indicators should be used in combination with the sub-indicators to draw sophisticated policy conclusions. The construction of composite indicators involves stages where judgement has to be made: the selection of sub-indicators, choice of model, weighting indicators and treatment of missing values etc. These judgements should be transparent and based on sound statistical principles. Notes - Composite indicators typically seek to reduce distinct quality measures into a single summary indicator. The methods underlying such simplifications should be clear and transparent. Too often, however, composite indicators are presented with limited or no information about the derivation and interpretation of constituent measures. The technical information required to understand how composite indicators were designed is sometimes not published5 or is not reported alongside the actual composite indicator. Some measures are used without clear conceptual justification A key assumption underlying the use of composite indicators is that the constituent parts together give a fair summary of the whole Many composite indicator schemes apply threshold-based classification rules to standardise disparate individual measures to a consistent scale. Measures that are naturally continuous are mapped to categorical bands before being combined into the overall composite The weighting assigned to individual measures contributing to composites is another problem area. As few hospitals perform equally well in all areas, performance can be artificially improved by giving higher weight to individual measures where a hospital performs better than average and vice versa. The choice of weights given to individual measures is thus a key determinant of performance on the overall composite, and different weights might allow almost any rank to be achieved.31 32 Therefore, transparency is needed about the importance attached to each measure in terms of the aim of the indicator, with supporting evidence. However, many schemes do not provide explicit justification for the weights used to create the composite https://www.medrxiv.org/content/10.1101/2020.12.08.20246256v1.full https://qualitysafety.bmj.com/content/28/4/338 --- ## Follow the recipe .pull-left[ In the development area, there has been long focus to improve the quality of index through methodology nd user guide such as [Handbook on Constructing Composite Indicators](https://www.oecd.org/sdd/42495745.pdf), the [Ten Step Guide](https://knowledge4policy.ec.europa.eu/publication/your-10-step-pocket-guide-composite-indicators-scoreboards_en) & the [pocket Guide](https://knowledge4policy.ec.europa.eu/sites/default/files/10-step-pocket-guide-to-composite-indicators-and-scoreboards.pdf) . To bring in the humanitarian world, the same level of statistical quality than in development (aka making the "__nexus__"), as soon as Humanitarian Severity is defined and indicators preselected, and before we can visualise the results, the following steps are required: * Treat the data (missing value, outliers) * Explore the correlations * Standardize/normalize the data * Define the weight of each indicator * Agree on an aggregation method * Check statistical coherence & assess robustness & sensitivity ] .pull-right[  ] ??? Notes - The 10 Steps are 1. Define/map the concept 2. Select the indicators 3. Analyse and treat data 4. Normalise indicators 5. Weight indicators 6. Aggregate indicators 7. Check statistical coherence, multivariate analysis 8. Uncertainty and sensitivity analysis 9. Make sense of the data - compare with other measures and concepts 10. Visualise results and communicate key messages --- ## Using scripts rather than clicks > Shall severity index "_be easily calculable in Excel_" in order to adjust to "_realities and necessities of the field_"? .pull-left[ Excel files are good to store information... but.... __Clicking in Excel__ does not allow to record and document processes in a systematic way: * The sequence of the data treatment * The value of the data at each steps * Different options in the calculation that can be compared * Visualisation of the index measurement quality ] .pull-right[ __Scripting in R__ not only address the inherent limitation of Excel but also ensures: * Full documentation in a predictable format * Automatic index auditing functions * Quick generation of predefined visuals * Easy peer review for perfect transparency ] The [Humanitarian-user-group](https://humanitarian-user-group.github.io/post/first-meeting/) includes now 480 participants from multiple organisations and parts of the world. ??? Current guidance were provided with a complex and heavy [excel tool](https://www.humanitarianresponse.info/sites/www.humanitarianresponse.info/files/documents/files/Nigeria_HNO_Comparison_Tool_v0.1.zip) that includes many macros script and 13 pages of [narrative explanation](https://www.humanitarianresponse.info/sites/www.humanitarianresponse.info/files/documents/files/Comparison%20Tool%20Technical%20Instructions.pdf) to explain how it works. --- class: left, middle # Learning objectives This session is a tutorial to show you how to go through each steps required to do the computations in a __[reproducible](https://unhcr-americas.github.io/reproducibility/index.html) & auditable__ way. ### Stage 0. Prepare data & information ### Stage 1. Review & Process data ### Stage 2. Question the assumptions ### Stage 3. Visualize Results ??? Depending on the pace of the group, if we do not finish today we will org anise a second session https://unhcr-americas.github.io/reproducibility/index.html --- # Webinar rules <i class="fa fa-spinner fa-spin fa-fw fa-2x"></i> Leverage this opportunity and make this session __lively__ - there's no stupid questions! <i class="fa fa-check-square fa-fw fa-2x"></i> Use the __chat__ to send your questions - we are two facilitators and one is focused on replying all questions directly in the chat while the session is on-going <i class="fa fa-pencil fa-fw fa-2x"></i> No need to take notes, all the session __content__ will be shared <i class="fa fa-cog fa-fw fa-2x"></i> All practical exercises are designed to get you __testing the commands__: > Login on a dedicated cloud-based version of RStudio with base packages pre-installed for this session @ > Paste the command from the chat to your online Rstudio session and check what is happening > In case it is not working as expected, share screenshot or error messages from the console in the chat --- ### The COIN Object A dedicated package, [COINr](https://bluefoxr.github.io/COINrDoc) has been created by the European Joint Research center to facilitate the creation of composite indicators. it includes __all steps & components__ to support the process.  ??? Notes - * `.$Input` is all input data and metadata that was initially used to construct the COIN. * `.$Data` consists of data frames of indicator data at each construction step, e.g. `.$Data$Raw` is the raw data used to construct the COIN, `.$Data$Normalised` is the same data set after normalisation, and so on. * `.$Parameters` contains parameters such as sets of weights, details on the number of indicators, aggregation levels, etc. * `.$Method` is a record of the methodology applied to build the COIN. In practice, this records all the inputs to any COINr functions applied in building the COIN, for example the normalisation method and parameters, data treatment specifications, and so on. Apart from keeping a record of the construction, this also allows the entire results to be regenerated using the information stored in the COIN. This will be explained better in [Adjustments and comparisons]. * `.$Analysis` contains any analysis tables which are sorted by the data sets to which they are applied. This can be e.g. indicator statistics of any of the data sets found in `.$Data`, or correlations. * `.$Results` contains summary tables of results, arranged and sorted for easy viewing. --- ## The COIN Sequence All COINr functions work through a sequence: Function | Description ------------------| --------------------------------------------- `assemble()` | Assembles indicator data/metadata into a COIN `checkData()` | Data availability check and unit screening `denominate()` | Denominate (divide) indicators by other indicators `impute()` | Impute missing data using various methods `treat()` | Treat outliers with Winsorisation and transformations `normalise()` | Normalise data using various methods `aggregate()` | Aggregate indicators into hierarchical levels, up to index Access to various weighting approach are provided by the COMPIND package in addition, a series of plotting and dashboarding functions are available --- class: inverse, left, middle # Stage 0. Prepare data & information * Document metadata * Specify Hierarchy * Organise data in tabular format --- ## Indicator data `IndData`: Each row is an observation and each column is a variable: * `UnitName`: name of each unit of observation: municipality, province or whatever admin level used to measure severity. * `UnitCode`: unique code assigned to each unit (ideally pcode as registered in HDX). This can be then used to facilitate map creation * `Year` Optional information to provide the reference year of the data. * Any column names that begin with `Den_*` are recognized as *denominators*, i.e. indicators that are used to scale other indicators with the `denominate()` function. * Any column name that starts with `Group_*` is recognized as a group column rather than an indicator. This is optional and some charts also recognize groups. * Any column that begins with `x_*` will be ignored and passed through. This is useful for e.g. alternative codes or other variables that you want to retain. ??? Notes - *Any remaining columns that do not begin with `x_` or use the other names in this list are recognised as indicators.* You will notice that all column (variable/indicator) names use short codes. This is to keep things concise in tables, rough plots etc. Indicator codes should be short, but informative enough that you know which indicator it refers to (e.g. "Ind1" is not so helpful). In some COINr plots, codes are displayed, so you might want to take that into account. In any case, the full names of indicators, and other details, are also specified in the indicator metadata table - see the next section. Some important rules and tips to keep in mind are: * The following columns are *required*. All other columns are optional (they can be excluded): - UnitCode - UnitName (if you don't have separate names you can just replicate the unit codes) - At least one indicator column * Columns don't have to be in any particular order, columns are identified by names rather than positions. * You can have as many indicators and units as you like. * Indicator codes and unit codes must have unique names. You can't use the same code twice otherwise bad things will happen. * Avoid any accented characters or basically any characters outside of English - this can sometimes cause trouble with encoding. * Column names are case-sensitive. Most things in COINr are built to have a degree of flexibility where possible, but *column names need to be written exactly as they appear here for COINr to recognise them*., --- ## Document Indicators: `IndMeta`: Indicator metadata * `IndName`: Full name of the indicator, which will be used in display plots. * `IndCode`: Reference unique code for each indicator. * `Direction`: takes values of either 1 or -1 for each indicator. A value of 1 means that higher values of the indicator correspond to higher values of the index, whereas -1 means the opposite. * `IndWeight`: initial weights assigned to each indicator. Weights are relative within each aggregation group and do not need to sum to one (they will be re-scaled to sum to one within each group). * `Denominator`: indicator codes of one of the denominator variables for each indicator to be denominated. E.g. here "Den_Pop" specifies that the indicator should be denominated by the "Den_Pop" indicators (population, in this case). For any indicators that do not need denominating, just set `NA`. * `IndUnit`: helps for keeping track of what the numbers actually mean, and can be used in plots. * `Target`: Optional information used if the normalisation method is distance-to-target. * Any column name that begins with `Agg` is recognised as a column specifying the aggregation group, and therefore the structure of the index. Aggregation columns *should be in the order of the aggregation*, but otherwise can have arbitrary names. ??? Notes - * `IndName` [**required**] This is the full name of the indicator, which will be used in display plots. * `IndCode`[**required**] A reference code for each indicator. These *must be the same codes as specified in the indicator metadata*. The codes must also be unique. * `Direction` [**required**] The "direction" of each indicator - this takes values of either 1 or -1 for each indicator. A value of 1 means that higher values of the indicator correspond to higher values of the index, whereas -1 means the opposite. * `IndWeight` [**required**] The initial weights assigned to each indicator. Weights are relative within each aggregation group and do not need to sum to one (they will be re-scaled to sum to one within each group by COINr). * `Denominator` [**optional**] These should be the indicator codes of one of the denominator variables for each indicator to be denominated. E.g. here "Den_Pop" specifies that the indicator should be denominated by the "Den_Pop" indicators (population, in this case). For any indicators that do not need denominating, just set `NA`. Denominators can also be specified later, so if you want you can leave this column out. See [Denomination] for more information. * `IndUnit` [**optional**] The units of the indicator. This helps for keeping track of what the numbers actually mean, and can be used in plots. * `Target` [**optional**] Targets associated with each indicator. Here, artificial targets have been generated which are 95% of the maximum score (accounting for the direction of the indicator). These are only used if the normalisation method is distance-to-target. * `Agg*` [**required**] Any column name that begins with `Agg` is recognised as a column specifying the aggregation group, and therefore the structure of the index. Aggregation columns *should be in the order of the aggregation*, but otherwise can have arbitrary names. --- ## Describe dimensions and nested levels: `AggMeta`: Aggregation metadata: This data frame simply consists of four columns, all of which are required for assembly: * `AgLevel`: aggregation level (where 1 is indicator level, 2 is the first aggregation level, and so on * `Code`: aggregation group codes must match the codes in the corresponding column in the indicator metadata aggregation columns. * `Name`: The aggregation group names. * `Weight`: aggregation group weights, that can be changed later on if needed. ??? Notes - This data frame simply consists of four columns, all of which are required for assembly: * `AgLevel` [**required**] The aggregation level (where 1 is indicator level, 2 is the first aggregation level, and so on -- see Section \@ref(Sec:ContructingCIs)) * `Code` [**required**] The aggregation group codes. These codes must match the codes in the corresponding column in the indicator metadata aggregation columns. * `Name` [**required**] The aggregation group names. * `Weight` [**required**] The aggregation group weights. These weights can be changed later on if needed. The codes specified here must be unique and not coincide with any other codes used for defining units or indicators. All columns are required, but do not need to be in a particular order. --- ## Load data and assemble > "_All original datasets should be uploaded on the Humanitarian Data Exchange platform for transparency purposes and to ensure reproducibility of results_" [JIAF ](https://www.jiaf.info/wp-content/uploads/2021/07/JIAF-1.1.pdf#page=44) .pull-left[ To build a composite indicator, the analysts needs to __prepare and assemble in Excel__ three data frames, according to a specific format with predefined column name. ```r library(COINr) library(dplyr) library(reactable) library(magrittr) # devtools::install_github("vqv/ggbiplot") library(ggbiplot) ASEM <- assemble( IndData = ASEMIndData, IndMeta = ASEMIndMeta, AggMeta = ASEMAggMeta ) ``` ] .pull-right[ ] ??? Notes -Apart from building the COIN, `assemble()` does a few other things: * It checks that indicator codes are consistent between indicator data and indicator metadata * It checks that required columns, such as indicator codes, are present * It returns some basic information about the data that was input, such as the number of indicators, the number of units, the number of aggregation levels and the groups in each level. This is done so you can check what you have entered, and that it agrees with your expectations. --- class: inverse, left, middle # Stage 1. Review & Process data 1. Indicator statistics 2. Missing data 3. Denominator 4. Outliers 5. Normalisation 6. Aggregation --- ## Check the indicator statistics .pull-left[ It is advisable to do this both on the raw data, and the normalised/aggregated data. Particular things of interest : * whether indicators or aggregates are highly skewed, * the percentage of missing data for each indicator, * the percentage of unique values, low data availability and zeroes, * the presence of correlations with denominators, and negative correlations ```r # We can change thresholds for flagging outliers and high/low correlations. ASEM <- getStats(ASEM, dset = "Raw") names(ASEM$Analysis$Raw$StatTable) # view stats within a table ASEM$Analysis$Raw$StatTable %>% roundDF() %>% reactable() ``` ] .pull-right[ Example: <div id="htmlwidget-a21d0dc38c517b3076a6" class="reactable html-widget" style="width:auto;height:auto;"></div> <script type="application/json" data-for="htmlwidget-a21d0dc38c517b3076a6">{"x":{"tag":{"name":"Reactable","attribs":{"data":{"Indicator":["Goods","Services","FDI","PRemit","ForPort","CostImpEx"],"Skew":[2.65,1.7,2.1,1.81,2.02,2.69],"Kurtosis":[8.27,2.38,4.89,2.94,3.3,9.84],"Prc.complete":[100,100,100,100,94.12,100],"SK.outlier.flag":["Outliers","OK","Outliers","OK","OK","Outliers"]},"columns":[{"accessor":"Indicator","name":"Indicator","type":"character"},{"accessor":"Skew","name":"Skew","type":"numeric"},{"accessor":"Kurtosis","name":"Kurtosis","type":"numeric"},{"accessor":"Prc.complete","name":"Prc.complete","type":"numeric"},{"accessor":"SK.outlier.flag","name":"SK.outlier.flag","type":"character"}],"defaultPageSize":10,"paginationType":"numbers","showPageInfo":true,"minRows":1,"dataKey":"971deae771f383a7df26b1d3478b4651","key":"971deae771f383a7df26b1d3478b4651"},"children":[]},"class":"reactR_markup"},"evals":[],"jsHooks":[]}</script> ] ??? Notes - Apart from the overall statistics for each indicator, `getStats` also returns a few other things: * `.$Outliers`, which flags individual outlying points using the relation to the interquartile range * `.$Correlations`, which gives a correlation matrix between all indicators in the data set * `.$DenomCorrelations`, which gives the correlations between indicators and any denominators At this point you may decide to check individual indicators, and some may be added or excluded. --- ## View missing data by group .left-column[ ```r ASEM <- checkData(ASEM, dset = "Raw") #names(ASEM$Analysis$Raw$MissDatByGroup) ASEM$Analysis$Raw$MissDatByGroup %>% reactable::reactable() ``` ] .right-column[ Example: <div id="htmlwidget-a213809cf48b8d538120" class="reactable html-widget" style="width:auto;height:auto;"></div> <script type="application/json" data-for="htmlwidget-a213809cf48b8d538120">{"x":{"tag":{"name":"Reactable","attribs":{"data":{"UnitCode":["AUT","BEL","BGR","HRV","CYP","CZE"],"ConEcFin":[100,100,100,100,100,100],"Instit":[100,100,100,100,100,100],"P2P":[100,100,100,100,100,100],"Physical":[100,100,100,100,100,100]},"columns":[{"accessor":"UnitCode","name":"UnitCode","type":"character"},{"accessor":"ConEcFin","name":"ConEcFin","type":"numeric"},{"accessor":"Instit","name":"Instit","type":"numeric"},{"accessor":"P2P","name":"P2P","type":"numeric"},{"accessor":"Physical","name":"Physical","type":"numeric"}],"defaultPageSize":10,"paginationType":"numbers","showPageInfo":true,"minRows":1,"dataKey":"87c27b7a789a7fcbe0c24e4b32e37d78","key":"87c27b7a789a7fcbe0c24e4b32e37d78"},"children":[]},"class":"reactR_markup"},"evals":[],"jsHooks":[]}</script> ] --- ## Add Denomination .pull-left[ To be able to compare small countries with larger ones, you may need to divide indicators by e.g. GDP or population , you can use `denominate()`. The specifications are either made initially in `IndMeta`, or as arguments to `denominate()`. In the case of the ASEM data set, these are included in `IndMeta` so the command is very simple (run `View(ASEMIndMeta)` to see). We will afterwards check the new stats to see what has changed. ```r # create denominated data set ASEM <- denominate(ASEM, dset = "Raw") # get stats of denominated data ASEM <- getStats(ASEM, dset = "Denominated") # view stats table ASEM$Analysis$Raw$StatTable %>% reactable() ``` ] .pull-right[ <div id="htmlwidget-7216e1677fc09c4b4aa7" class="reactable html-widget" style="width:auto;height:auto;"></div> <script type="application/json" data-for="htmlwidget-7216e1677fc09c4b4aa7">{"x":{"tag":{"name":"Reactable","attribs":{"data":{"Indicator":["Goods","Services","FDI","PRemit","ForPort","CostImpEx","Tariff","TBTs","TIRcon","RTAs","Visa","StMob","Research","Pat","CultServ","CultGood","Tourist","MigStock","Lang","LPI","Flights","Ship","Bord","Elec","Gas","ConSpeed","Cov4G","Embs","IGOs","UNVote","Renew","PrimEner","CO2","MatCon","Forest","Poverty","Palma","TertGrad","FreePress","TolMin","NGOs","CPI","FemLab","WomParl","PubDebt","PrivDebt","GDPGrow","RDExp","NEET"],"Min":[7.23391,1.37855,0.13,0.166212673,0.00189,0,0,1,0,1,1,1.77,175,0.3,0.00266,0.046,0.125,0.0817,0.018848483,2.067254,0.98951,0,0,0,0.00841,5.5,0,28,82,35.75571975,0.014935036,53.47854269,0.297200884,2.577148198,0.326390729,0,0.88,2.00915,8,1.1,0,21,0.297008553,4.8583,2.81,19.67392582,-0.677398726,0.08466,0.6],"Max":[1919.194,657.109,75.6,30.20990534,10601.75,992,10.53,1754,1,46,92,445,96337,2771.7,9.56996,74.468,82.57,10.9285,21.48526056,4.225967,210.8244,21.1719761,122,110.1795628,94.8,28.6,100,100,329,43.18604666,64.92377591,192.4508294,22.12470124,38.38107313,31.84554345,22.7,2.62,37.60498,87,9.8,1824,89,1.027471558,43.553,247.98,421.3852734,7.579331501,4.22816,42.1],"Mean":[289.655230392157,128.976915490196,12.5282352941176,6.5646564537451,1577.42245583333,124.527450980392,2.460625,794.84,0.745098039215686,23.921568627451,69.1764705882353,64.08,16340.3333333333,333.577777777778,1.44536872340426,9.99920454545455,15.0599411764706,2.48286254901961,9.31297102596078,3.41187103921569,38.6248649019608,11.9846482901961,23.6078431372549,16.2337490582941,10.033378627451,14.2860465116279,75.643137254902,70.8627450980392,198.21568627451,40.9085279423529,22.6574337905882,106.0809069266,6.70618701223529,16.0157883236078,5.94544522001961,2.43636363636364,1.33511111111111,21.9474554545455,38.4313725490196,5.39103657078431,220.490196078431,57.3725490196078,0.818128034122449,24.4039235294118,62.955,140.4819133182,3.21768390711765,1.50067872340426,12.5918367346939],"Median":[142.6095,54.06682,6.1,4.691557569,259.22195,45,1.6,1140.5,1,30,79,33.2,7731,112.6,0.57832,3.3045,9.205,1.40403,9.103447457,3.420043,25.64994,12.6898376,18,6.911237833,1.14,14.6,91,75,197,42.52486565,17.17004755,96.628885555,5.935712482,15.01561584,4.984798325,0.4,1.25,23.349525,28,5.1,66,57,0.863333365,23.703,52.095,128.4633291,3.526781655,1.28773,10.9],"Q.5":[8.86104,4.257325,0.3745,0.394401418,6.3544315,0,0.682,2.45,0,6.5,17,4.015,296,3.82,0.020264,0.393,0.685,0.16039,0.058611452,2.53846,2.046485,0,0,0,0.02785,6.93,4.5,40.5,91,37.0289611,2.133072858,59.52611685,0.4484509815,4.2026474595,1.2074743395,0,0.888,8.523555,11.5,2,1,29,0.5708348784,9.65675,18.6835,24.797607571,0,0.143376,4],"Q.25":[44.056335,15.74111,2.21,1.3284886695,46.9796675,0,1.6,117.25,0.5,17,61,13.55,1724,23.7,0.12698,1.21275,3.167,0.617915,4.7844799725,2.9888285,6.766135,9.09473575,8.5,1.1994055,0.3765,10.95,65.25,52,163.5,38.55354989,9.1309509865,78.386059055,4.288568985,11.00078918,2.680571254,0,1.1,16.83868,20.5,3.6,22.5,40.5,0.797709816,18.0952,34.8275,76.3130178225,1.500441624,0.698315,7],"Q.75":[353.4318,199.0231,17.15,7.869372995,1588.92625,155.5,1.865,1185,1,30,81,69.55,20205,437.6,1.89308,11.79725,17.3565,4.05015,14.146422985,3.80534,46.12665,16.80465635,32,22.917848,12.6,16.9,98.35,85,234,42.750592245,33.78263446,128.519078,8.775045819,18.78079666,6.560708454,1.1,1.48,28.375165,55.5,6.8,187.5,75,0.895348577,30.77675,82.0325,189.7215823,4.8964907175,2.199805,15.3],"Q.95":[814.11775,494.75935,44.4,23.62377878,7260.43965,357,9.0385,1389.4,1,30.5,87.5,250.5,67515,1315.3,6.36414399999999,40.14465,55.821,8.04576,19.311049795,4.1960615,142.5818,20.55922925,68.5,59.05141575,39.3,22.42,100,100,286.5,43.10103234,58.543931175,172.64365511,15.109478465,33.174814945,15.23627423,17.905,2.084,34.100282,84.5,8.45,1028.5,85,0.9486528234,39.39395,131.0315,277.942729575,6.234377299,3.205538,27.7],"IQ.range":[309.375465,183.28199,14.94,6.5408843255,1541.9465825,155.5,0.265,1067.75,0.5,13,20,56,18481,413.9,1.7661,10.5845,14.1895,3.432235,9.3619430125,0.8165115,39.360515,7.7099206,23.5,21.7184425,12.2235,5.95,33.1,33,70.5,4.197042355,24.6516834735,50.133018945,4.486476834,7.78000748,3.8801372,1.1,0.38,11.536485,35,3.2,165,34.5,0.097638761,12.68155,47.205,113.4085644775,3.3960490935,1.50149,8.3],"Std.dev":[388.199568743154,161.486403807352,15.7936612887427,7.36909142748543,2587.10738170865,181.95193000178,2.37800059611144,550.397087082942,0.440142579394539,9.38476027878156,22.1663762328017,86.552290114127,23136.9014309753,526.714200530424,2.09339171493828,15.0199048902008,18.3603071111698,2.5333486364019,6.34966010038487,0.538476182161587,46.7222749837091,6.83536372502865,24.8177987995491,22.6707160759585,17.5131489287945,5.10646562121418,32.1456840953927,20.0579356942265,58.6253575598444,2.38163355245216,17.1991880924677,35.4450598860987,4.54881942243597,8.2097963694891,5.63158085323983,5.50881285101283,0.374606594990132,8.52314264817321,24.4198729742485,2.15366148725598,387.492212698476,19.7969298471392,0.141116797101997,9.72405914265413,43.65082794876,84.8866760658072,2.04210968952599,1.01796515190764,7.7396392232534],"Skew":[2.64997325440064,1.70108492961865,2.10282278366424,1.80878916414948,2.02003190527394,2.69142601371096,2.44917948805346,-0.395674578932419,-1.15917910585813,-0.678014308269118,-1.76181098200601,2.72797534516909,2.22186365367817,2.85116283541283,2.47062411262379,2.6078626587636,2.19038863755193,1.53821593371074,0.0933840143573727,-0.304268128297048,2.10328719405155,-0.575668041929831,2.14823598917068,2.22527362397486,2.8294485676336,0.46220372370792,-1.37251912510789,-0.367952855467896,-0.0634717804573101,-0.675399422731595,0.822493598146914,0.749589375068268,1.10715761602933,0.75928568176863,2.71126766413492,2.92128092027989,1.32671553064854,-0.383410823627673,0.753917389948978,0.0633328034097616,2.78947906349471,0.0202750630403466,-2.05581276054001,0.0858880311895876,1.99943007183029,0.95719599688544,-0.0516054308910296,0.609541137027055,1.60096391414841],"Kurtosis":[8.26660952313818,2.37565572047974,4.89206341076569,2.93807022141744,3.29996357325386,9.84263323082698,5.41091882258184,-1.54545518184887,-0.684747583243824,0.133048185203679,2.16514756216367,8.3051069070947,4.81307642892378,10.0569263602903,6.38553972224239,7.73771869891281,4.90298373058199,2.05750463128345,-1.07685476154497,-0.656751412485129,4.50887936970066,-0.681479454104936,5.79149049252436,5.7910267631817,10.3346493687986,0.187321419069999,0.541931446910913,-0.993112250617892,-0.248981004779727,-1.20433214215785,-0.066097797313857,-0.146736281013745,1.83219799402873,0.424487166791781,9.34783589457403,7.87851731090618,2.30031331440488,-0.303413988992342,-0.780349481437203,-0.660276237839447,7.91742478522325,-1.24970883905041,5.02431792598603,-0.789179358193493,5.97160464667763,1.42863670983772,-0.93031220759653,-0.275571451201434,3.63194790902608],"N.missing":[0,0,0,0,3,0,3,1,0,0,0,0,0,6,4,7,0,0,0,0,0,0,0,0,0,8,0,0,0,0,0,1,0,0,0,7,6,7,0,0,0,0,2,0,1,1,0,4,2],"Prc.complete":[100,100,100,100,94.1176470588235,100,94.1176470588235,98.0392156862745,100,100,100,100,100,88.2352941176471,92.156862745098,86.2745098039216,100,100,100,100,100,100,100,100,100,84.3137254901961,100,100,100,100,100,98.0392156862745,100,100,100,86.2745098039216,88.2352941176471,86.2745098039216,100,100,100,100,96.078431372549,100,98.0392156862745,98.0392156862745,100,92.156862745098,96.078431372549],"Low.data.flag":["OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK"],"Prc.Unique":[1,1,1,1,0.96078431372549,0.568627450980392,0.411764705882353,0.843137254901961,0.0392156862745098,0.274509803921569,0.431372549019608,0.980392156862745,1,0.901960784313726,0.941176470588235,0.882352941176471,1,1,1,1,1,0.843137254901961,0.588235294117647,0.901960784313726,1,0.803921568627451,0.725490196078431,0.647058823529412,0.901960784313726,0.96078431372549,1,1,1,1,1,0.352941176470588,0.705882352941177,0.882352941176471,0.745098039215686,0.784313725490196,0.92156862745098,0.764705882352941,0.980392156862745,0.96078431372549,1,1,0.980392156862745,0.941176470588235,0.803921568627451],"Frac.Zero":[0,0,0,0,0,0.352941176470588,0.0392156862745098,0,0.254901960784314,0,0,0,0,0,0,0,0,0,0,0,0,0.176470588235294,0.117647058823529,0.0784313725490196,0,0,0.0392156862745098,0,0,0,0,0,0,0,0,0.274509803921569,0,0,0,0,0.0196078431372549,0,0,0,0,0,0.0392156862745098,0,0],"SK.outlier.flag":["Outliers","OK","Outliers","OK","OK","Outliers","Outliers","OK","OK","OK","OK","Outliers","Outliers","Outliers","Outliers","Outliers","Outliers","OK","OK","OK","Outliers","OK","Outliers","Outliers","Outliers","OK","OK","OK","OK","OK","OK","OK","OK","OK","Outliers","Outliers","OK","OK","OK","OK","Outliers","OK","Outliers","OK","OK","OK","OK","OK","OK"],"Low.Outliers.IQR":[0,0,0,0,0,0,5,0,0,0,5,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,5,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,7,0,0,0,0,0,0],"High.Outliers.IQR":[3,3,4,4,7,2,12,0,0,0,0,4,4,4,4,5,4,1,0,0,5,0,3,3,5,1,0,0,0,0,0,0,2,4,5,7,3,0,0,0,7,0,0,0,2,1,0,0,3],"Collinearity":["Collinear","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","Collinear","OK","OK","Collinear","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK"],"Neg.Correls":[1,1,1,0,6,16,15,7,5,9,9,2,0,1,2,0,0,4,9,9,1,0,1,6,1,6,9,0,7,11,10,8,6,6,1,17,14,10,18,18,2,9,6,5,1,8,18,7,19],"Denom.correlation":["High","High","High","High","OK","OK","OK","OK","OK","OK","OK","High","High","OK","OK","High","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK","OK"]},"columns":[{"accessor":"Indicator","name":"Indicator","type":"character"},{"accessor":"Min","name":"Min","type":"numeric"},{"accessor":"Max","name":"Max","type":"numeric"},{"accessor":"Mean","name":"Mean","type":"numeric"},{"accessor":"Median","name":"Median","type":"numeric"},{"accessor":"Q.5","name":"Q.5","type":"numeric"},{"accessor":"Q.25","name":"Q.25","type":"numeric"},{"accessor":"Q.75","name":"Q.75","type":"numeric"},{"accessor":"Q.95","name":"Q.95","type":"numeric"},{"accessor":"IQ.range","name":"IQ.range","type":"numeric"},{"accessor":"Std.dev","name":"Std.dev","type":"numeric"},{"accessor":"Skew","name":"Skew","type":"numeric"},{"accessor":"Kurtosis","name":"Kurtosis","type":"numeric"},{"accessor":"N.missing","name":"N.missing","type":"numeric"},{"accessor":"Prc.complete","name":"Prc.complete","type":"numeric"},{"accessor":"Low.data.flag","name":"Low.data.flag","type":"character"},{"accessor":"Prc.Unique","name":"Prc.Unique","type":"numeric"},{"accessor":"Frac.Zero","name":"Frac.Zero","type":"numeric"},{"accessor":"SK.outlier.flag","name":"SK.outlier.flag","type":"character"},{"accessor":"Low.Outliers.IQR","name":"Low.Outliers.IQR","type":"numeric"},{"accessor":"High.Outliers.IQR","name":"High.Outliers.IQR","type":"numeric"},{"accessor":"Collinearity","name":"Collinearity","type":"character"},{"accessor":"Neg.Correls","name":"Neg.Correls","type":"numeric"},{"accessor":"Denom.correlation","name":"Denom.correlation","type":"character"}],"defaultPageSize":10,"paginationType":"numbers","showPageInfo":true,"minRows":1,"dataKey":"9b095c7e5f39da97c7c11dd9037b62ef","key":"9b095c7e5f39da97c7c11dd9037b62ef"},"children":[]},"class":"reactR_markup"},"evals":[],"jsHooks":[]}</script> ] ??? Notes -According to the new table, there are now no high correlations with denominators, which indicates some kind of success. --- ## Treat the data for outliers .pull-left[ Standard approach which Winsorises each indicator up to a specified limit of points, in order to bring skew and kurtosis below specified thresholds. If Winsorisation fails, it applies a log transformation or similar.Following treatment, it is a good idea to check which indicators were treated and how: ```r ASEM <- treat(ASEM, dset = "Denominated", winmax = 5) ASEM$Analysis$Treated$TreatSummary %>% filter(Treatment != "None") %>% reactable() ``` ] .pull-right[ <div id="htmlwidget-3a6c18fdacf57ab01955" class="reactable html-widget" style="width:auto;height:auto;"></div> <script type="application/json" data-for="htmlwidget-3a6c18fdacf57ab01955">{"x":{"tag":{"name":"Reactable","attribs":{"data":{".rownames":["V2","V3","V5","V6","V7"],"IndCode":["Services","FDI","ForPort","CostImpEx","Tariff"],"Low":[0,0,0,0,0],"High":[4,2,3,1,3],"TreatSpec":["Default, winmax = 5","Default, winmax = 5","Default, winmax = 5","Default, winmax = 5","Default, winmax = 5"],"Treatment":["Winsorised 4 points","Winsorised 2 points","Winsorised 3 points","Winsorised 1 points","Winsorised 3 points"]},"columns":[{"accessor":".rownames","name":"","type":"character","sortable":false,"filterable":false},{"accessor":"IndCode","name":"IndCode","type":"character"},{"accessor":"Low","name":"Low","type":"numeric"},{"accessor":"High","name":"High","type":"numeric"},{"accessor":"TreatSpec","name":"TreatSpec","type":"character"},{"accessor":"Treatment","name":"Treatment","type":"character"}],"defaultPageSize":10,"paginationType":"numbers","showPageInfo":true,"minRows":1,"dataKey":"ead0d1ef76747ff9ea51f0fee9a364af","key":"ead0d1ef76747ff9ea51f0fee9a364af"},"children":[]},"class":"reactR_markup"},"evals":[],"jsHooks":[]}</script> ] ??? Notes - --- ## Visualise effect of data treatment .left-column[ Effect of the Winsorisation can be plotted using box plots or violin plots. ```r COINr::iplotIndDist2(ASEM, dsets = c("Denominated", "Treated"), icodes = "Services", ptype = "Scatter") ``` ] .right-column[ <div id="htmlwidget-de6e874a84f330101707" style="width:600px;height:370.8px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-de6e874a84f330101707">{"x":{"visdat":{"7fa15bcf3359":["function () ","plotlyVisDat"]},"cur_data":"7fa15bcf3359","attrs":{"7fa15bcf3359":{"mode":"markers","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter"},"7fa15bcf3359.1":{"mode":"markers","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter","x":{},"y":{},"text":["Austria","Belgium","Bulgaria","Croatia","Cyprus","Czech Republic","Denmark","Estonia","Finland","France","Germany","Greece","Hungary","Ireland","Italy","Latvia","Lithuania","Luxembourg","Malta","Netherlands","Norway","Poland","Portugal","Romania","Slovakia","Slovenia","Spain","Sweden","Switzerland","United Kingdom","Australia","Bangladesh","Brunei Darussalam","Cambodia","China","India","Indonesia","Japan","Kazakhstan","Korea","Lao PDR","Malaysia","Mongolia","Myanmar","New Zealand","Pakistan","Philippines","Russian Federation","Singapore","Thailand","Vietnam"],"hoverinfo":"text","marker":{"size":15},"showlegend":false,"inherit":true}},"layout":{"margin":{"b":40,"l":60,"t":25,"r":10},"xaxis":{"domain":[0,1],"automargin":true,"title":"Trade in services <br> (Million USD)"},"yaxis":{"domain":[0,1],"automargin":true,"title":"Trade in services <br> (Million USD)"},"hovermode":"closest","showlegend":false},"source":"A","config":{"modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"data":[{"mode":"markers","type":"scatter","marker":{"color":"rgba(31,119,180,1)","line":{"color":"rgba(31,119,180,1)"}},"error_y":{"color":"rgba(31,119,180,1)"},"error_x":{"color":"rgba(31,119,180,1)"},"line":{"color":"rgba(31,119,180,1)"},"xaxis":"x","yaxis":"y","frame":null},{"mode":"markers","type":"scatter","x":[0.276681940794445,0.462254867226653,0.243742052901564,0.338794072256007,0.760098867666084,0.222543207322299,0.370398919453455,0.438248128135714,0.225605264893292,0.191158749982344,0.166319805540168,0.198135393098302,0.310924849651287,1.10917783352835,0.108956030826193,0.263149434041176,0.288904481310413,2.82768641582263,1.82464239937715,0.445881137056196,0.224642950519038,0.175530424570937,0.213728847524469,0.166318536379482,0.180966399813671,0.263728015550315,0.15938355214756,0.256778035747555,0.310029600268569,0.195712022067552,0.0833020877198363,0.0456396445810669,0.185490394414346,0.289391165863444,0.058574838537451,0.13023397074421,0.0579954816204536,0.0711933183269379,0.124883704482977,0.142319736141744,0.0872184959616381,0.245944958634483,0.243897222607497,0.0949810719669238,0.141592261176336,0.0389460389950171,0.181091173647035,0.0983541886685242,1.02616844245993,0.265365886247069,0.148738354805822],"y":[0.276681940794445,0.462254867226653,0.243742052901564,0.338794072256007,0.760098867666084,0.222543207322299,0.370398919453455,0.438248128135714,0.225605264893292,0.191158749982344,0.166319805540168,0.198135393098302,0.310924849651287,0.760098867666084,0.108956030826193,0.263149434041176,0.288904481310413,0.760098867666084,0.760098867666084,0.445881137056196,0.224642950519038,0.175530424570937,0.213728847524469,0.166318536379482,0.180966399813671,0.263728015550315,0.15938355214756,0.256778035747555,0.310029600268569,0.195712022067552,0.0833020877198363,0.0456396445810669,0.185490394414346,0.289391165863444,0.058574838537451,0.13023397074421,0.0579954816204536,0.0711933183269379,0.124883704482977,0.142319736141744,0.0872184959616381,0.245944958634483,0.243897222607497,0.0949810719669238,0.141592261176336,0.0389460389950171,0.181091173647035,0.0983541886685242,0.760098867666084,0.265365886247069,0.148738354805822],"text":["Austria","Belgium","Bulgaria","Croatia","Cyprus","Czech Republic","Denmark","Estonia","Finland","France","Germany","Greece","Hungary","Ireland","Italy","Latvia","Lithuania","Luxembourg","Malta","Netherlands","Norway","Poland","Portugal","Romania","Slovakia","Slovenia","Spain","Sweden","Switzerland","United Kingdom","Australia","Bangladesh","Brunei Darussalam","Cambodia","China","India","Indonesia","Japan","Kazakhstan","Korea","Lao PDR","Malaysia","Mongolia","Myanmar","New Zealand","Pakistan","Philippines","Russian Federation","Singapore","Thailand","Vietnam"],"hoverinfo":["text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text"],"marker":{"color":"rgba(255,127,14,1)","size":15,"line":{"color":"rgba(255,127,14,1)"}},"showlegend":false,"error_y":{"color":"rgba(255,127,14,1)"},"error_x":{"color":"rgba(255,127,14,1)"},"line":{"color":"rgba(255,127,14,1)"},"xaxis":"x","yaxis":"y","frame":null}],"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ] ??? Notes -It is also a good idea to visualise and compare the treated data against the untreated data. The best way to do this interactively is to call `indDash()` again, which allows comparison of treated and untreated indicators side by side. --- ## Normalise the data .left-column[ Normalisation is the operation of bringing indicators onto comparable scales so that they can be aggregated more fairly. This is done by setting `ntype` ```r ASEM <- normalise(ASEM, dset = "Treated", ntype = "minmax", npara = list(minmax = c(0,100))) ``` ] .right-column[ * `minmax` min-max transformation that scales each indicator onto an interval specified by `npara`, e.g. if `npara$minmax = c(0,10)` the indicators will scale to [0, 10]. * `zscore` scales the indicator to have a mean and standard deviation specified by `npara`, e.g. if `npara$zscore = c(0,1)` the indicator will have mean zero and standard deviation 1. * `scaled` is a general linear transformation defined by `npara$scaled = c(a,b)` which subtracts `a` and divides by `b`. * `goalposts` is a capped linear transformation with `npara$goalposts = c(l, u, a)`, where `l` is the lower bound, `u` is the upper bound, and `a` is a scaling parameter. * `rank` replaces indicator scores with their corresponding ranks, such that the highest scores have the largest rank values. Ties take average rank values. Here `npara` is not used. * `borda` is similar to `rank` but uses Borda scores, which are simply rank values minus one. * `prank` gives percentile ranks. * `fracmax` scales each indicator by dividing the value by the maximum value of the indicator. * `dist2ref` gives the distance to a reference unit, defined by `npara`, `dist2max` gives the normalised distance to the maximum of each indicator and `dist2targ` gives the normalised distance to indicator targets. Any scores that exceed the target will be capped at a normalised value of one. ] ??? Notes -Again, we could visualise and check stats here but to keep things shorter we'll skip that for now. * `custom` allows to pass a custom function to apply to every indicator. For example, `npara$custom = function(x) {x/max(x, na.rm = T)}` would give the "fracmax" normalisation method described above. * `none` the indicator is not normalised (this is mainly useful for adjustments and sensitivity analysis). --- ## Equal weighting does not mean equal importance > "_If strategic decisions require a consideration of the relative weight / importance of each domain, this should occur during the joint analysis stage, using the domain-specific outputs of the comparison tool as a guide. If variable domain-weighting within the model itself is absolutely essential, it could be adjusted in individual circumstances._" [IASC, 2015](https://www.humanitarianresponse.info/sites/www.humanitarianresponse.info/files/documents/files/Comparison%20Tool%20Guidance%202015%20FINAL-EN.pdf#page=12) Weights (defined in `agweights`) do need to be carefully considered, taking into account at least the relative conceptual importance of indicators, the statistical implications of the value selected for each indicator weights. All this needs to be communicated to the index end users. Weights are defined manually within the orginal `IndMeta` & `AggMeta` and can be manually updated at any stage. ```r # put new weights in the COIN #ASEM$Parameters$Weights$NewWeights <- NewWeights # Aggregate again to get new results # ASEM <- aggregate(ASEM, # set = "Normalised", # agweights = "NewWeights") ``` The [Compind](https://rdrr.io/cran/Compind/) packages provided a series of methods. ??? Notes - Question the IASC statement "The equal weighting of domains has been implemented deliberately to prevent potentially long and fruitless discussions which could limit the tool’s ability to achieve consensus. " See Compind tutorial https://humanitarian-user-group.github.io/post/compositeindicator/ --- ## Aggregation of all indicators: Compensability .left-column[ Aggregation type can be done at multiple stage and through the `agtype` setting ```r ASEM <- aggregate(ASEM, agtype = "arith_mean", dset = "Normalised") ``` ] .right-column[ Deciding on the aggregation method reflects assumption in terms of compensability between indicators: * `arith_mean`: **weighted arithmetic mean** or additive combination may be perceived as easy-to-interpret but implies full compensability. * `geom_mean` the **weighted geometric mean** uses the product of the indicators rather than the sum and prevent compensability * `harm_mean` **weighted harmonic mean**, also called [Pythagorean means](https://en.wikipedia.org/wiki/Pythagorean_means) is the the least compensatory of the the three means, and is often used when making the mean of rates and ratios. * `median` The *weighted median* is defined by ordering indicator values, then picking the value which has half of the assigned weight above it, and half below it. * `copeland` The [Copeland method](https://en.wikipedia.org/wiki/Copeland%27s_method) is based pairwise comparisons between units, calculated from an *outranking matrix* ] ??? Notes - --- class: inverse, left, middle # Stage 2. Question the assumptions * Correlations between indicators, with parents pillars, within and between pillars * Internal Consistency * Principle component * Effect of specific formulation * Sensitivity Analysis ??? correlation structure between the underlying indicators and its effect on the overall score (i.e., the CI). Ideally, there should be positive correlations between the indicators as this indicates that individual variables are linked to an overarching concept --- ## Reconstruct the index from raw data .pull-left[ to check differences, Check calculations for pre-aggregated data to make sure that they are correct. If you only have normalised and aggregated data, you can still at least check the aggregation stage as follows. Assuming that the indicator columns in your pre-aggregated data are normalised, we can first manually create a normalised data set: ] -- .pull-right[ ```r # extract aggregated data set (as a data frame) Aggregated_Data <- ASEM$Data$Aggregated # assemble new COIN only including pre-aggregated data ASEM_preagg <- assemble(IndData = Aggregated_Data, IndMeta = ASEMIndMeta, AggMeta = ASEMAggMeta, preagg = TRUE) ``` ```r ASEM_preagg$Data$Normalised <- ASEM_preagg$Data$PreAggregated %>% select(!ASEM$Input$AggMeta$Code) ``` Here we have just copied the pre-aggregated data, but removed any aggregation columns. Next, we can aggregate these columns using COINr. ```r ASEM_preagg <- aggregate(ASEM_preagg, dset = "Normalised", agtype = "arith_mean") # check data set names names(ASEM_preagg$Data) ``` ``` ## [1] "PreAggregated" "Normalised" "Aggregated" ``` ] ??? Notes - COINr will check that the column names in the indicator data correspond to the codes supplied in the `IndMeta` and `AggMeta`. This means that these two latter data frames still need to be supplied. However, from this point the COIN functions as any other, although consider that it cannot be regenerated (the methodology to arrive at the pre-aggregated data is unknown), and the only data set present is the "PreAggregated" data. --- ## Check whether data frames are the same .pull-left[ Double-checking calculations is tedious but in the process you often learn a lot. ] -- .pull-right[ ```r all_equal(ASEM_preagg$Data$PreAggregated, ASEM_preagg$Data$Aggregated) ``` ``` ## [1] TRUE ``` ] ??? Notes - As expected, here the results are the same. If the results are *not* the same, `all_equal()` will give some information about the differences. If you reconstruct the index from raw data, and you find differences, a few points are worth considering: 1. The difference could be due to an error in the pre-aggregated data, or even a bug in COINr. If you suspect the latter please open an issue on the repo. 2. If you have used data treatment or imputation, differences can easily arise. One reason is that some things are possible to calculate in different ways. COINr uses certain choices, but other choices are also valid. Examples of this include: - Skew and kurtosis (underlying data treatment) - see e.g. `?e1071::skewness` - Correlation and treatment of missing values - see `?cor` - Ranks and how to handle ties - see `?rank` 3. Errors can also arise from how you entered the data. Worth re-checking all that as well. --- ## Revise internal consistency Cronbach's alpha calculation should be done for any group of indicators ```r # all indicators getCronbach(ASEM, dset = "Normalised") ``` ``` ## [1] 0.9005396 ``` ```r # indicators in connectivity sub-index getCronbach(ASEM, dset = "Normalised", icodes = "Conn", aglev = 1) ``` ``` ## [1] 0.8799702 ``` ```r # indicators in sustainability sub-index getCronbach(ASEM, dset = "Normalised", icodes = "Sust", aglev = 1) ``` ``` ## [1] 0.6842895 ``` ```r # pillars in connectivity sub-index getCronbach(ASEM, dset = "Aggregated", icodes = "Conn", aglev = 2) ``` ``` ## [1] 0.7737003 ``` ```r # pillars in sustainability sub-index getCronbach(ASEM, dset = "Aggregated", icodes = "Sust", aglev = 2) ``` ``` ## [1] -0.3461489 ``` ??? Notes -Recall here that because we have plotted a "Raw" indicator against the index, and this is a negative indicator, its direction is flipped. Meaning that in this plot there is a positive correlation, but plotting the normalised indicator against the index would show a negative correlation. --- ## Correlation analysis on the normalised data .left-column[ As indicators have had their directions reversed where appropriate, how the consistency of the sustainability pillars is then affected? ```r COINr::plotCorr(ASEM, dset = "Aggregated", icodes = "Sust", aglevs = 2, pval = 0) ``` ] -- .right-column[ <img src="TrainingSeverityIndex_files/figure-html/unnamed-chunk-19-1.png" width="1200" /> ] ??? Notes - Sustainability dimensions are not well-correlated and are in fact slightly negatively correlated. This points to trade-offs between different aspects of sustainable development: as social sustainability increases, environmental sustainability often tends to decrease. Or at best, an increase in one does not really imply an increase in the others. --- ## Principle component analysis This can be done only on data without missing value ```r # impute one ASEM2 <- impute(ASEM, dset = "Denominated", imtype = "indgroup_mean", groupvar = "Group_GDP") ASEM2 <- normalise(ASEM2, dset = "Treated", ntype = "minmax", npara = list(minmax = c(0,100))) # try here at the indicator level, let's say within one of the pillar groups: PCA_P2P <- getPCA(ASEM2, dset = "Normalised", icodes = "P2P", aglev = 1, out2 = "list") summary(PCA_P2P$PCAresults$P2P$PCAres) ``` ``` ## Importance of components: ## PC1 PC2 PC3 PC4 PC5 PC6 PC7 ## Standard deviation 1.9251 1.0797 0.9485 0.86979 0.77992 0.63344 0.49754 ## Proportion of Variance 0.4633 0.1457 0.1125 0.09457 0.07603 0.05016 0.03094 ## Cumulative Proportion 0.4633 0.6090 0.7214 0.81600 0.89203 0.94219 0.97313 ## PC8 ## Standard deviation 0.46362 ## Proportion of Variance 0.02687 ## Cumulative Proportion 1.00000 ``` ```r # ggbiplot(PCA_P2P$PCAresults$P2P$PCAres, # labels = ASEM$Data$Normalised$UnitCode, # groups = ASEM$Data$Normalised$Group_EurAsia) ``` ??? Notes - We can see that the first principle component explains about 50% of the variance of the indicators, which is perhaps borderline for the existence of single latent variable. That said, many composite indicators will not yield strong latent variables in many cases. We can now produce a PCA biplot using this information. Once again we see a fairly clear divide between Asia and Europe in terms of P2P connectivity, with exceptions of Singapore which is very well-connected. We also note the small cluster of New Zealand and Australia which have very similar characteristics in P2P connectivity. --- ## Understand the effective weights of each indicator .left-column[ The weight of an indicator in the final index is due to its own weight, plus the weight of all its parents, as well as the number of indicators and aggregates in each group. ```r # `effectiveWeight()` gives effective weights for all levels. We can check the indicator level by filtering: EffWts <- effectiveWeight(ASEM) COINr::plotframework(ASEM) ``` ] .right-column[ <div id="htmlwidget-f16367b8d1573a6a7fae" style="width:900px;height:556.2px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-f16367b8d1573a6a7fae">{"x":{"visdat":{"54dd26026ec4":["function () ","plotlyVisDat"]},"cur_data":"54dd26026ec4","attrs":{"54dd26026ec4":{"labels":["Goods","Services","FDI","PRemit","ForPort","CostImpEx","Tariff","TBTs","TIRcon","RTAs","Visa","StMob","Research","Pat","CultServ","CultGood","Tourist","MigStock","Lang","LPI","Flights","Ship","Bord","Elec","Gas","ConSpeed","Cov4G","Embs","IGOs","UNVote","Renew","PrimEner","CO2","MatCon","Forest","Poverty","Palma","TertGrad","FreePress","TolMin","NGOs","CPI","FemLab","WomParl","PubDebt","PrivDebt","GDPGrow","RDExp","NEET","ConEcFin","Instit","P2P","Physical","Political","Environ","Social","SusEcFin","Conn","Sust","Index"],"parents":["ConEcFin","ConEcFin","ConEcFin","ConEcFin","ConEcFin","Instit","Instit","Instit","Instit","Instit","Instit","P2P","P2P","P2P","P2P","P2P","P2P","P2P","P2P","Physical","Physical","Physical","Physical","Physical","Physical","Physical","Physical","Political","Political","Political","Environ","Environ","Environ","Environ","Environ","Social","Social","Social","Social","Social","Social","Social","Social","Social","SusEcFin","SusEcFin","SusEcFin","SusEcFin","SusEcFin","Conn","Conn","Conn","Conn","Conn","Sust","Sust","Sust","Index","Index",""],"values":[0.02,0.02,0.02,0.02,0.02,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.1,0.1,0.1,0.1,0.1,0.166666666666667,0.166666666666667,0.166666666666667,0.5,0.5,1],"branchvalues":"total","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"sunburst"}},"layout":{"margin":{"b":40,"l":60,"t":25,"r":10},"hovermode":"closest","showlegend":false},"source":"A","config":{"modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"data":[{"labels":["Goods","Services","FDI","PRemit","ForPort","CostImpEx","Tariff","TBTs","TIRcon","RTAs","Visa","StMob","Research","Pat","CultServ","CultGood","Tourist","MigStock","Lang","LPI","Flights","Ship","Bord","Elec","Gas","ConSpeed","Cov4G","Embs","IGOs","UNVote","Renew","PrimEner","CO2","MatCon","Forest","Poverty","Palma","TertGrad","FreePress","TolMin","NGOs","CPI","FemLab","WomParl","PubDebt","PrivDebt","GDPGrow","RDExp","NEET","ConEcFin","Instit","P2P","Physical","Political","Environ","Social","SusEcFin","Conn","Sust","Index"],"parents":["ConEcFin","ConEcFin","ConEcFin","ConEcFin","ConEcFin","Instit","Instit","Instit","Instit","Instit","Instit","P2P","P2P","P2P","P2P","P2P","P2P","P2P","P2P","Physical","Physical","Physical","Physical","Physical","Physical","Physical","Physical","Political","Political","Political","Environ","Environ","Environ","Environ","Environ","Social","Social","Social","Social","Social","Social","Social","Social","Social","SusEcFin","SusEcFin","SusEcFin","SusEcFin","SusEcFin","Conn","Conn","Conn","Conn","Conn","Sust","Sust","Sust","Index","Index",""],"values":[0.02,0.02,0.02,0.02,0.02,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0166666666666667,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0125,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0185185185185185,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.0333333333333333,0.1,0.1,0.1,0.1,0.1,0.166666666666667,0.166666666666667,0.166666666666667,0.5,0.5,1],"branchvalues":"total","type":"sunburst","marker":{"color":"rgba(31,119,180,1)","line":{"color":"rgba(255,255,255,1)"}},"frame":null}],"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ] ??? Notes - A sometimes under-appreciated fact is that .... For example, an equally-weighted group of two indicators will each have a higher weight (0.5) than an equally weighted group of ten indicators (0.1), and this applies to all aggregation levels. --- ## What-ifs: Effect of formulation on scores & ranks Examples could include different weights, as well as adding/removing/substituting indicators or entire aggregation groups ```r # Copy the COIN ASEM_NoPolitical <- ASEM # Copy the weights ASEM_NoPolitical$Parameters$Weights$NoPolitical <- ASEM_NoPolitical$Parameters$Weights$Original # Set Political weight to zero ASEM_NoPolitical$Parameters$Weights$NoPolitical$Weight[ ASEM_NoPolitical$Parameters$Weights$NoPolitical$Code == "Political"] <- 0 # Alter methodology to use new weights ASEM_NoPolitical$Method$aggregate$agweights <- "NoPolitical" # Regenerate ASEM_NoPolitical <- regen(ASEM_NoPolitical) ``` Now we need to compare the two alternative indexes: ```r COINr::compTable(ASEM, ASEM_NoPolitical, dset = "Aggregated", isel = "Index", COINnames = c("Original", "NoPolitical")) ``` ``` ## UnitCode UnitName Rank: Original Rank: NoPolitical RankChange ## 6 BRN Brunei Darussalam 38 45 -7 ## 14 EST Estonia 21 28 -7 ## 13 ESP Spain 24 18 6 ## 44 ROU Romania 25 20 5 ## 9 CYP Cyprus 29 33 -4 ## 21 IDN Indonesia 44 40 4 ## 8 CHN China 49 46 3 ## 19 HRV Croatia 18 21 -3 ## 20 HUN Hungary 20 23 -3 ## 25 JPN Japan 34 31 3 ## 31 LUX Luxembourg 8 11 -3 ## 33 MLT Malta 12 15 -3 ## 50 THA Thailand 41 38 3 ## 10 CZE Czech Republic 17 19 -2 ## 11 DEU Germany 9 7 2 ## 12 DNK Denmark 3 5 -2 ## 15 FIN Finland 14 12 2 ## 16 FRA France 19 17 2 ## 23 IRL Ireland 11 9 2 ## 26 KAZ Kazakhstan 47 49 -2 ## 28 KOR Korea 31 29 2 ## 29 LAO Lao PDR 45 47 -2 ## 32 LVA Latvia 23 25 -2 ## 35 MNG Mongolia 46 44 2 ## 42 POL Poland 26 24 2 ## 1 AUS Australia 35 36 -1 ## 2 AUT Austria 7 8 -1 ## 3 BEL Belgium 5 4 1 ## 17 GBR United Kingdom 15 14 1 ## 24 ITA Italy 28 27 1 ## 36 MYS Malaysia 40 41 -1 ## 38 NOR Norway 4 3 1 ## 39 NZL New Zealand 33 34 -1 ## 43 PRT Portugal 27 26 1 ## 51 VNM Vietnam 36 35 1 ## 4 BGD Bangladesh 48 48 0 ## 5 BGR Bulgaria 30 30 0 ## 7 CHE Switzerland 1 1 0 ## 18 GRC Greece 32 32 0 ## 22 IND India 42 42 0 ## 27 KHM Cambodia 37 37 0 ## 30 LTU Lithuania 16 16 0 ## 34 MMR Myanmar 43 43 0 ## 37 NLD Netherlands 2 2 0 ## 40 PAK Pakistan 50 50 0 ## 41 PHL Philippines 39 39 0 ## 45 RUS Russian Federation 51 51 0 ## 46 SGP Singapore 13 13 0 ## 47 SVK Slovakia 22 22 0 ## 48 SVN Slovenia 10 10 0 ## 49 SWE Sweden 6 6 0 ## AbsRankChange ## 6 7 ## 14 7 ## 13 6 ## 44 5 ## 9 4 ## 21 4 ## 8 3 ## 19 3 ## 20 3 ## 25 3 ## 31 3 ## 33 3 ## 50 3 ## 10 2 ## 11 2 ## 12 2 ## 15 2 ## 16 2 ## 23 2 ## 26 2 ## 28 2 ## 29 2 ## 32 2 ## 35 2 ## 42 2 ## 1 1 ## 2 1 ## 3 1 ## 17 1 ## 24 1 ## 36 1 ## 38 1 ## 39 1 ## 43 1 ## 51 1 ## 4 0 ## 5 0 ## 7 0 ## 18 0 ## 22 0 ## 27 0 ## 30 0 ## 34 0 ## 37 0 ## 40 0 ## 41 0 ## 45 0 ## 46 0 ## 47 0 ## 48 0 ## 49 0 ``` ??? Notes - 1. Use the "exclude" argument of `assemble()` to exclude the relevant indicators 2. Set the weight of the Political pillar to zero 3. Remove the indicators manually Here we will take the quickest option, which is Option 2. The results show that the rank changes are not major at the index level, with a maximum shift of six places for Estonia. The implication might be (depending on context) that the inclusion or not of the Political pillar does not have a drastic impact on the results, although one should bear in mind that the changes on lower aggregation levels are probably higher, and the indicators themselves may have value and add legitimacy to the framework. In other words, it is not always just the index that counts. --- ## Sensitivity analysis: test the overall effect of uncertainties ??? Notes - *Sensitivity analysis* can help to quantify the uncertainty in the scores and rankings of the composite indicator, and to identify which assumptions are driving this uncertainty, and which are less important. To perform an uncertainty or sensitivity analysis, one must define several things: 1. The system or model (in this case it is a composite indicator, represented as a COIN) 2. Which assumptions to treat as uncertain 3. The alternative values or distributions assigned to each uncertain assumption 4. Which output or outputs to target (i.e. to calculate confidence intervals for) 5. Methodological specifications for the sensitivity analysis itself, for example the method and the number of replications to run. --- class: inverse, left, middle # Stage 3. Visualize Results * Index * SubDimensions * Maps * Summary * Export --- ## Index & Sub Dimensions <div id="htmlwidget-8ed2b5a702238cbaf02f" style="width:900px;height:556.2px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-8ed2b5a702238cbaf02f">{"x":{"visdat":{"54dd799ce087":["function () ","plotlyVisDat"]},"cur_data":"54dd799ce087","attrs":{"54dd799ce087":{"x":{},"y":{},"key":{},"name":"Conn","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"bar"},"54dd799ce087.1":{"x":{},"y":[74.4548456167802,68.3903994276055,72.984587047517,71.7788274306318,64.2341742396999,72.5749070754546,69.2985693672551,56.81833742418,67.9098328992129,68.9716674007193,65.7926327143908,60.9637968255754,57.6991892825095,66.1023604829868,63.6728088969191,66.4772657543424,61.6532131838662,62.0649972175479,60.8678822272939,59.2814319235455,57.2132026799891,59.9131040908634,62.3059670915951,60.6274184659754,65.6537370943831,60.3401006954177,59.0664869695629,56.8603194771632,51.5105979290639,57.1575466581957,57.2995489799116,54.3895698370399,59.5233375387543,58.0209235615006,53.554075246916,55.6165057791429,55.994246771221,40.1032109709557,56.3193877339437,41.1227817399095,50.2887979564375,51.7239816595384,59.665454111438,52.2947665758468,56.2332312514876,48.0624863031439,47.1567805729016,60.8698552648875,48.2003027304709,53.6533367376402,41.476226644861],"key":{},"name":"Sust","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"bar","inherit":true}},"layout":{"margin":{"b":40,"l":60,"t":25,"r":10},"title":{"text":"Sustainable Connectivity","y":0.95},"yaxis":{"domain":[0,1],"automargin":true,"title":"","showticklabels":false},"xaxis":{"domain":[0,1],"automargin":true,"title":"","categoryorder":"array","categoryarray":{"Index":[68.621403668364,65.1651358926319,65.0410383080279,64.6071663752533,64.1121692355648,63.224823201446,62.3642937416572,61.6868684841489,61.1295968310016,60.7497051038254,60.5137694239751,60.2709945407649,59.8030710567783,59.1189300300464,58.2416267711836,57.2939257480353,56.8523358014573,56.1034368331307,55.8882429293819,55.7937082339417,55.3573637246148,55.1587365396442,55.146816044091,55.0829063362119,54.7648797130732,54.2959872906309,54.0784920389337,52.3019881561583,51.6452608367962,50.9378852194357,50.4324063148894,49.8521681997249,49.3797777604972,47.4949280469203,45.959441786501,43.2854587144294,43.2017559236092,41.7270225080216,41.7237550660752,41.7198537081406,41.1580879386198,40.1595694805621,40.1268409828404,40.0841372709449,39.7607259845468,39.4695646071414,39.0616577624139,38.9191348380666,38.2199505267257,37.806472915641,35.731036156064]},"type":"category"},"barmode":"stack","hovermode":"closest","showlegend":true},"source":"barclick","config":{"modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"data":[{"x":["CHE","NLD","DNK","NOR","BEL","SWE","AUT","LUX","DEU","SVN","IRL","MLT","SGP","FIN","GBR","LTU","CZE","HRV","FRA","HUN","EST","SVK","LVA","ESP","ROU","POL","PRT","ITA","CYP","BGR","KOR","GRC","NZL","JPN","AUS","VNM","KHM","BRN","PHL","MYS","THA","IND","MMR","IDN","LAO","MNG","KAZ","BGD","CHN","PAK","RUS"],"y":[62.7879617199478,61.9398723576584,57.0974895685389,57.4355053198748,63.9901642314296,53.8747393274374,55.4300181160593,66.5553995441179,54.3493607627902,52.5277428069315,55.2349061335593,59.5781922559545,61.9069528310472,52.1354995771061,52.8104446454481,48.1105857417282,52.0514584190484,50.1418764487134,50.9086036314699,52.3059845443379,53.5015247692406,50.404368988425,47.9876649965869,49.5383942064484,43.8760223317633,48.2518738858441,49.0904971083045,47.7436568351534,51.7799237445284,44.7182237806758,43.5652636498673,45.3147665624098,39.2362179822402,36.96893253234,38.3648083260859,30.954411649716,30.4092650759974,43.3508340450876,27.1281223982067,42.3169256763717,32.027377920802,28.5951573015857,20.5882278542428,27.873507966043,23.288220717606,30.8766429111389,30.9665349519263,16.9684144112457,28.2395983229804,21.9596090936419,29.985845667267],"key":["CHE","NLD","DNK","NOR","BEL","SWE","AUT","LUX","DEU","SVN","IRL","MLT","SGP","FIN","GBR","LTU","CZE","HRV","FRA","HUN","EST","SVK","LVA","ESP","ROU","POL","PRT","ITA","CYP","BGR","KOR","GRC","NZL","JPN","AUS","VNM","KHM","BRN","PHL","MYS","THA","IND","MMR","IDN","LAO","MNG","KAZ","BGD","CHN","PAK","RUS"],"name":"Conn","type":"bar","marker":{"color":"rgba(31,119,180,1)","line":{"color":"rgba(31,119,180,1)"}},"error_y":{"color":"rgba(31,119,180,1)"},"error_x":{"color":"rgba(31,119,180,1)"},"xaxis":"x","yaxis":"y","_isNestedKey":false,"frame":null},{"x":["CHE","NLD","DNK","NOR","BEL","SWE","AUT","LUX","DEU","SVN","IRL","MLT","SGP","FIN","GBR","LTU","CZE","HRV","FRA","HUN","EST","SVK","LVA","ESP","ROU","POL","PRT","ITA","CYP","BGR","KOR","GRC","NZL","JPN","AUS","VNM","KHM","BRN","PHL","MYS","THA","IND","MMR","IDN","LAO","MNG","KAZ","BGD","CHN","PAK","RUS"],"y":[74.4548456167802,68.3903994276055,72.984587047517,71.7788274306318,64.2341742396999,72.5749070754546,69.2985693672551,56.81833742418,67.9098328992129,68.9716674007193,65.7926327143908,60.9637968255754,57.6991892825095,66.1023604829868,63.6728088969191,66.4772657543424,61.6532131838662,62.0649972175479,60.8678822272939,59.2814319235455,57.2132026799891,59.9131040908634,62.3059670915951,60.6274184659754,65.6537370943831,60.3401006954177,59.0664869695629,56.8603194771632,51.5105979290639,57.1575466581957,57.2995489799116,54.3895698370399,59.5233375387543,58.0209235615006,53.554075246916,55.6165057791429,55.994246771221,40.1032109709557,56.3193877339437,41.1227817399095,50.2887979564375,51.7239816595384,59.665454111438,52.2947665758468,56.2332312514876,48.0624863031439,47.1567805729016,60.8698552648875,48.2003027304709,53.6533367376402,41.476226644861],"key":["CHE","NLD","DNK","NOR","BEL","SWE","AUT","LUX","DEU","SVN","IRL","MLT","SGP","FIN","GBR","LTU","CZE","HRV","FRA","HUN","EST","SVK","LVA","ESP","ROU","POL","PRT","ITA","CYP","BGR","KOR","GRC","NZL","JPN","AUS","VNM","KHM","BRN","PHL","MYS","THA","IND","MMR","IDN","LAO","MNG","KAZ","BGD","CHN","PAK","RUS"],"name":"Sust","type":"bar","marker":{"color":"rgba(255,127,14,1)","line":{"color":"rgba(255,127,14,1)"}},"error_y":{"color":"rgba(255,127,14,1)"},"error_x":{"color":"rgba(255,127,14,1)"},"xaxis":"x","yaxis":"y","_isNestedKey":false,"frame":null}],"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ??? Notes - --- ## Sub Indices Interactions ```r options(htmltools.dir.version = FALSE, htmltools.preserve.raw = FALSE, tibble.width = 60) COINr::iplotIndDist2(ASEM, dsets = "Aggregated", icodes = "Index") ``` <div id="htmlwidget-4d1c9ef950d2f363f8e9" style="width:2400px;height:1920px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-4d1c9ef950d2f363f8e9">{"x":{"visdat":{"54dd28d389a5":["function () ","plotlyVisDat"]},"cur_data":"54dd28d389a5","attrs":{"54dd28d389a5":{"mode":"markers","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter"},"54dd28d389a5.1":{"mode":"markers","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter","x":{},"y":{},"text":["Austria","Belgium","Bulgaria","Croatia","Cyprus","Czech Republic","Denmark","Estonia","Finland","France","Germany","Greece","Hungary","Ireland","Italy","Latvia","Lithuania","Luxembourg","Malta","Netherlands","Norway","Poland","Portugal","Romania","Slovakia","Slovenia","Spain","Sweden","Switzerland","United Kingdom","Australia","Bangladesh","Brunei Darussalam","Cambodia","China","India","Indonesia","Japan","Kazakhstan","Korea","Lao PDR","Malaysia","Mongolia","Myanmar","New Zealand","Pakistan","Philippines","Russian Federation","Singapore","Thailand","Vietnam"],"hoverinfo":"text","marker":{"size":15},"showlegend":false,"inherit":true}},"layout":{"margin":{"b":40,"l":60,"t":25,"r":10},"xaxis":{"domain":[0,1],"automargin":true,"title":["Trade in goods <br> ()","Trade in services <br> ()","Foreign direct investment <br> ()","Personal remittances (received and paid) <br> ()","Foreign portfolio investment liabilities and assets <br> ()","Cost to export/import <br> ()","Mean tariff rate <br> ()","Technical barriers to trade <br> ()","Signatory of TIR Convention <br> ()","Regional trade agreements <br> ()","Visa-free or visa-on-arrival <br> ()","International student mobility in tertiary education <br> ()","Research outputs with international collaborations <br> ()","Patents with foreign co-inventor <br> ()","Trade in cultural services <br> ()","Trade in cultural goods <br> ()","Tourist arrivals at national borders <br> ()","Migrant stock <br> ()","Common languages users <br> ()","Logistics Performance Index <br> ()","International flights passenger capacity <br> ()","Liner Shipping Connectivity Index <br> ()","Border crossings <br> ()","Trade in electricity <br> ()","Trade in gas <br> ()","Average connection speed <br> ()","Den_Pop covered by at least a 4G mobile network <br> ()","Embassies network <br> ()","Participation in international intergovernmental organisations <br> ()","UN voting alignment <br> ()","Renewable energy in total final energy consumption <br> ()","Primary energy use per GDP <br> ()","CO2 emissions per capita <br> ()","Domestic material consumption per capita <br> ()","Net forest loss <br> ()","Den_Pop living below the intenational poverty line <br> ()","Palma Index <br> ()","Tertiary graduates <br> ()","Freedom of the press <br> ()","Tolerance for minorities <br> ()","Presence of international non-governmental organisations <br> ()","Corruption Perceptions Index <br> ()","Female labour-force participation <br> ()","Women's participation in national parliaments <br> ()","Public debt as a percentage of GDP <br> ()","Private debt, loans and debt securities as percentage of GDP <br> ()","GDP per capita growth <br> ()","R&D expenditure as a percentage of GDP <br> ()","Proportion of youth not in education, employment or training <br> ()"]},"yaxis":{"domain":[0,1],"automargin":true,"title":["Trade in goods <br> ()","Trade in services <br> ()","Foreign direct investment <br> ()","Personal remittances (received and paid) <br> ()","Foreign portfolio investment liabilities and assets <br> ()","Cost to export/import <br> ()","Mean tariff rate <br> ()","Technical barriers to trade <br> ()","Signatory of TIR Convention <br> ()","Regional trade agreements <br> ()","Visa-free or visa-on-arrival <br> ()","International student mobility in tertiary education <br> ()","Research outputs with international collaborations <br> ()","Patents with foreign co-inventor <br> ()","Trade in cultural services <br> ()","Trade in cultural goods <br> ()","Tourist arrivals at national borders <br> ()","Migrant stock <br> ()","Common languages users <br> ()","Logistics Performance Index <br> ()","International flights passenger capacity <br> ()","Liner Shipping Connectivity Index <br> ()","Border crossings <br> ()","Trade in electricity <br> ()","Trade in gas <br> ()","Average connection speed <br> ()","Den_Pop covered by at least a 4G mobile network <br> ()","Embassies network <br> ()","Participation in international intergovernmental organisations <br> ()","UN voting alignment <br> ()","Renewable energy in total final energy consumption <br> ()","Primary energy use per GDP <br> ()","CO2 emissions per capita <br> ()","Domestic material consumption per capita <br> ()","Net forest loss <br> ()","Den_Pop living below the intenational poverty line <br> ()","Palma Index <br> ()","Tertiary graduates <br> ()","Freedom of the press <br> ()","Tolerance for minorities <br> ()","Presence of international non-governmental organisations <br> ()","Corruption Perceptions Index <br> ()","Female labour-force participation <br> ()","Women's participation in national parliaments <br> ()","Public debt as a percentage of GDP <br> ()","Private debt, loans and debt securities as percentage of GDP <br> ()","GDP per capita growth <br> ()","R&D expenditure as a percentage of GDP <br> ()","Proportion of youth not in education, employment or training <br> ()"]},"hovermode":"closest","showlegend":false},"source":"A","config":{"modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"data":[{"mode":"markers","type":"scatter","marker":{"color":"rgba(31,119,180,1)","line":{"color":"rgba(31,119,180,1)"}},"error_y":{"color":"rgba(31,119,180,1)"},"error_x":{"color":"rgba(31,119,180,1)"},"line":{"color":"rgba(31,119,180,1)"},"xaxis":"x","yaxis":"y","frame":null},{"mode":"markers","type":"scatter","x":[40.7147978302105,80.0790829492294,47.1178543410879,29.6595542299126,21.5525492605235,88.8553124519079,24.3804882124031,75.3754890317444,20.7554972641161,15.0864687872643,29.5283169130779,11.5609768118045,87.2032124615804,23.0805774954351,15.7540188845476,62.5592560718532,59.6935324437879,27.8860822178772,75.179034652212,60.1300025526122,17.8605021462841,44.7279167208669,25.9424245175779,34.1151202706534,100,76.7590567226543,16.2628895134529,22.6777229358393,33.0443479319491,11.5808067789694,6.49608314755034,8.83072346888023,35.288121363973,63.2943152409557,1.73181451143796,0,7.70970515433294,1.42407266048311,19.4924394427823,19.1743392104261,30.5551357206689,56.3972144036942,39.8835633758377,24.7038370008743,8.99879169192228,2.25795082759758,18.1757885716913,10.3132031797815,88.2538120920766,39.4852273246478,82.3897744174304],"y":[32.966091561691,58.6989070002964,28.3984206626414,41.5789859430416,100,25.4588432615058,45.9615309377953,55.3699678161877,25.8834491770973,21.1068590367762,17.6625205478115,22.0742882471442,37.714448289341,100,9.70806589779169,31.0895813109849,34.6609529045343,100,100,56.4284132131985,25.7500080622608,18.9397281887691,24.2365836450423,17.6623445572814,19.6935178192904,31.1698113934496,16.7006920536502,30.2060795010617,37.5903068664518,21.7382469900896,6.1507141012756,0.928181284178657,20.3208459556872,34.7284399244394,2.72186404352122,12.6586110627088,2.64152643768182,4.47162904309004,11.9167064277242,14.3345062290365,6.69379014370325,28.7038906885967,28.4199375588891,7.77020220182281,14.2336295581721,0,19.7108198152618,8.2379417110495,100,31.3969297838429,15.2245559395681],"text":["Austria","Belgium","Bulgaria","Croatia","Cyprus","Czech Republic","Denmark","Estonia","Finland","France","Germany","Greece","Hungary","Ireland","Italy","Latvia","Lithuania","Luxembourg","Malta","Netherlands","Norway","Poland","Portugal","Romania","Slovakia","Slovenia","Spain","Sweden","Switzerland","United Kingdom","Australia","Bangladesh","Brunei Darussalam","Cambodia","China","India","Indonesia","Japan","Kazakhstan","Korea","Lao PDR","Malaysia","Mongolia","Myanmar","New Zealand","Pakistan","Philippines","Russian Federation","Singapore","Thailand","Vietnam"],"hoverinfo":["text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text","text"],"marker":{"color":"rgba(255,127,14,1)","size":15,"line":{"color":"rgba(255,127,14,1)"}},"showlegend":false,"error_y":{"color":"rgba(255,127,14,1)"},"error_x":{"color":"rgba(255,127,14,1)"},"line":{"color":"rgba(255,127,14,1)"},"xaxis":"x","yaxis":"y","frame":null}],"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ??? Notes - --- ## Display the index on a Map ```r options(htmltools.dir.version = FALSE, htmltools.preserve.raw = FALSE, tibble.width = 60) COINr::iplotMap(ASEM, dset = "Aggregated", isel = "Conn") ``` <div id="htmlwidget-bfa30c58bc7a376ac3a9" style="width:2400px;height:1920px;" class="plotly html-widget"></div> <script type="application/json" data-for="htmlwidget-bfa30c58bc7a376ac3a9">{"x":{"visdat":{"54dd48e776b0":["function () ","plotlyVisDat"]},"cur_data":"54dd48e776b0","attrs":{"54dd48e776b0":{"z":{},"locations":["AUT","BEL","BGR","HRV","CYP","CZE","DNK","EST","FIN","FRA","DEU","GRC","HUN","IRL","ITA","LVA","LTU","LUX","MLT","NLD","NOR","POL","PRT","ROU","SVK","SVN","ESP","SWE","CHE","GBR","AUS","BGD","BRN","KHM","CHN","IND","IDN","JPN","KAZ","KOR","LAO","MYS","MNG","MMR","NZL","PAK","PHL","RUS","SGP","THA","VNM"],"text":{},"colorscale":"Greens","key":["AUT","BEL","BGR","HRV","CYP","CZE","DNK","EST","FIN","FRA","DEU","GRC","HUN","IRL","ITA","LVA","LTU","LUX","MLT","NLD","NOR","POL","PRT","ROU","SVK","SVN","ESP","SWE","CHE","GBR","AUS","BGD","BRN","KHM","CHN","IND","IDN","JPN","KAZ","KOR","LAO","MYS","MNG","MMR","NZL","PAK","PHL","RUS","SGP","THA","VNM"],"showscale":true,"reversescale":true,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"choropleth"}},"layout":{"margin":{"b":40,"l":60,"t":25,"r":10},"scene":{"zaxis":{"title":"get(isel)"}},"hovermode":"closest","showlegend":false,"legend":{"yanchor":"top","y":0.5},"title":"Connectivity","geo":{"showframe":false,"showcoastlines":true,"projection":{"type":"Mercator"},"showcountries":false,"bgcolor":"rgba(255,255,255,0)","showframe.1":false,"showland":true,"landcolor":"rgba(229,229,229,1)","countrycolor":"rgba(255,255,255,1)","coastlinecolor":"rgba(255,255,255,1)"}},"source":"mapclick","config":{"modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"data":[{"colorbar":{"title":"Conn","ticklen":2,"len":0.5,"lenmode":"fraction","y":1,"yanchor":"top"},"colorscale":"Greens","showscale":true,"z":[55.4300181160593,63.9901642314296,44.7182237806758,50.1418764487134,51.7799237445284,52.0514584190484,57.0974895685389,53.5015247692406,52.1354995771061,50.9086036314699,54.3493607627902,45.3147665624098,52.3059845443379,55.2349061335593,47.7436568351534,47.9876649965869,48.1105857417282,66.5553995441179,59.5781922559545,61.9398723576584,57.4355053198748,48.2518738858441,49.0904971083045,43.8760223317633,50.404368988425,52.5277428069315,49.5383942064484,53.8747393274374,62.7879617199478,52.8104446454481,38.3648083260859,16.9684144112457,43.3508340450876,30.4092650759974,28.2395983229804,28.5951573015857,27.873507966043,36.96893253234,30.9665349519263,43.5652636498673,23.288220717606,42.3169256763717,30.8766429111389,20.5882278542428,39.2362179822402,21.9596090936419,27.1281223982067,29.985845667267,61.9069528310472,32.027377920802,30.954411649716],"locations":["AUT","BEL","BGR","HRV","CYP","CZE","DNK","EST","FIN","FRA","DEU","GRC","HUN","IRL","ITA","LVA","LTU","LUX","MLT","NLD","NOR","POL","PRT","ROU","SVK","SVN","ESP","SWE","CHE","GBR","AUS","BGD","BRN","KHM","CHN","IND","IDN","JPN","KAZ","KOR","LAO","MYS","MNG","MMR","NZL","PAK","PHL","RUS","SGP","THA","VNM"],"text":["Austria","Belgium","Bulgaria","Croatia","Cyprus","Czech Republic","Denmark","Estonia","Finland","France","Germany","Greece","Hungary","Ireland","Italy","Latvia","Lithuania","Luxembourg","Malta","Netherlands","Norway","Poland","Portugal","Romania","Slovakia","Slovenia","Spain","Sweden","Switzerland","United Kingdom","Australia","Bangladesh","Brunei Darussalam","Cambodia","China","India","Indonesia","Japan","Kazakhstan","Korea","Lao PDR","Malaysia","Mongolia","Myanmar","New Zealand","Pakistan","Philippines","Russian Federation","Singapore","Thailand","Vietnam"],"key":["AUT","BEL","BGR","HRV","CYP","CZE","DNK","EST","FIN","FRA","DEU","GRC","HUN","IRL","ITA","LVA","LTU","LUX","MLT","NLD","NOR","POL","PRT","ROU","SVK","SVN","ESP","SWE","CHE","GBR","AUS","BGD","BRN","KHM","CHN","IND","IDN","JPN","KAZ","KOR","LAO","MYS","MNG","MMR","NZL","PAK","PHL","RUS","SGP","THA","VNM"],"reversescale":true,"type":"choropleth","marker":{"line":{"color":"rgba(31,119,180,1)"}},"_isNestedKey":false,"frame":null}],"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.2,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ??? Notes - --- ## Present summary table. ```r COINr::getResults(ASEM, tab_type = "Summary") %>% head(7)%>% knitr::kable() ``` |UnitCode |UnitName | Index| Rank| |:--------|:-----------|-----:|----:| |CHE |Switzerland | 68.62| 1| |NLD |Netherlands | 65.17| 2| |DNK |Denmark | 65.04| 3| |NOR |Norway | 64.61| 4| |BEL |Belgium | 64.11| 5| |SWE |Sweden | 63.22| 6| |AUT |Austria | 62.36| 7| ??? Notes - --- ## Export Results Get tables to present (the highest levels of aggregation are the first columns, rather than the last, and it is sorted by index score). ```r # Write full results table to COIN COINr::getResults(ASEM, tab_type = "FullWithDenoms", out2 = "COIN") # Export entire COIN to Excel COINr::coin2Excel(ASEM, "ASEM_results.xlsx") ``` ??? Notes - --- class: inverse, left, middle # Conclusion. Reproducibility to ensure transparency * Strictly Use "curated & published" data for your sub-indicators - Data should be available on Humanitarian Data eXchange @ data.humdata.org * Document your process with R Code & put it into Github - The data structure required for the packages allows for better discoverability! * Call for peer and/or independent review to cover yourself! when using scripted reproducible analysis, this should be very quick!!!